Cloud computing logical diagram

Cloud computing is becoming one of the next industry buzzwords, joining the ranks of terms such as grid computing, utility computing, virtualization, and clustering.

Cloud computing overlaps some of the concepts of distributed, grid, and utility computing; however, it does have its own meaning when used correctly in context. The conceptual overlap is partly due to changes in technology, usage, and implementation over the years.

Cloud computing provides computation, software, data access, and storage services that do not require end-user knowledge of the physical location and configuration of the system that delivers the services. Parallels to this concept can be drawn with the electricity grid, wherein end-users consume power without needing to understand the component devices or infrastructure required to provide the service.

The concept of cloud computing fills a perpetual need of IT: a way to increase capacity or add capabilities on the fly without investing in new infrastructure, training new personnel, or licensing new software. Cloud computing encompasses any subscription-based or pay-per-use service that, in real time over the Internet, extends IT's existing capabilities.

Cloud computing describes a new supplement, consumption, and delivery model for IT services based on Internet protocols, and it typically involves provisioning of dynamically scalable and often virtualized resources. It is a byproduct and consequence of the ease-of-access to remote computing sites provided by the Internet. This may take the form of web-based tools or applications that users can access and use through a web browser as if the programs were installed locally on their own computers.

Cloud computing providers deliver applications via the Internet; these are accessed from a web browser, while the business software and data are stored on servers at a remote location. In some cases, legacy applications (line-of-business applications that until now have been prevalent in thin client Windows computing) are delivered via a screen-sharing technology, while the computing resources are consolidated at a remote data center location; in other cases, entire business applications have been coded using web-based technologies such as AJAX.

Most cloud computing infrastructures consist of services delivered through shared data-centers and appearing as a single point of access for consumers' computing needs. Commercial offerings may be required to meet service-level agreements (SLAs), but specific terms are less often negotiated by smaller companies.

Comparison

Cloud computing shares characteristics with:

§ Autonomic computing — Computer systems capable of self-management.

§ Client–server model — Client–server computing refers broadly to any distributed application that distinguishes between service providers (servers) and service requesters (clients).

§ Grid computing — "A form of distributed and parallel computing, whereby a 'super and virtual computer' is composed of a cluster of networked, loosely coupled computers acting in concert to perform very large tasks."

§ Mainframe computer — Powerful computers used mainly by large organizations for critical applications, typically bulk data processing such as census, industry and consumer statistics, enterprise resource planning, and financial transaction processing.

§ Utility computing — The "packaging of computing resources, such as computation and storage, as a metered service similar to a traditional public utility, such as electricity."

§ Peer-to-peer — Distributed architecture without the need for central coordination, with participants being at the same time both suppliers and consumers of resources (in contrast to the traditional client–server model).

§ Service-oriented computing – software-as-a-service.

Characteristics

Cloud computing exhibits the following key characteristics:

§ Agility improves with users' ability to re-provision technological infrastructure resources.

§ Application programming interface (API) accessibility to software that enables machines to interact with cloud software in the same way the user interface facilitates interaction between humans and computers. Cloud computing systems typically use REST-based APIs.

§ Cost is claimed to be reduced and in a public cloud delivery model capital expenditure is converted to operational expenditure. This is purported to lower barriers to entry, as infrastructure is typically provided by a third-party and does not need to be purchased for one-time or infrequent intensive computing tasks. Pricing on a utility computing basis is fine-grained with usage-based options and fewer IT skills are required for implementation (in-house).

§ Device and location independence enable users to access systems using a web browser regardless of their location or what device they are using (e.g., PC, mobile phone). As infrastructure is off-site (typically provided by a third-party) and accessed via the Internet, users can connect from anywhere.

§ Multi-tenancy enables sharing of resources and costs across a large pool of users, thus allowing for: centralization of infrastructure in locations with lower costs (such as real estate and electricity); peak-load capacity increases (users need not engineer for the highest possible load levels); and utilization and efficiency improvements for systems that are often only 10–20% utilized.

§ Reliability is improved if multiple redundant sites are used, which makes well-designed cloud computing suitable for business continuity and disaster recovery.

§ Scalability and Elasticity via dynamic ("on-demand") provisioning of resources on a fine-grained, self-service basis near real-time, without users having to engineer for peak loads.

§ Performance is monitored, and consistent and loosely coupled architectures are constructed using web services as the system interface.

§ Security could improve due to centralization of data, increased security-focused resources, etc., but concerns can persist about loss of control over certain sensitive data, and the lack of security for stored kernels. Security is often as good as or better than under traditional systems, in part because providers are able to devote resources to solving security issues that many customers cannot afford. However, the complexity of security is greatly increased when data is distributed over a wider area or greater number of devices and in multi-tenant systems that are being shared by unrelated users. In addition, user access to security audit logs may be difficult or impossible. Private cloud installations are in part motivated by users' desire to retain control over the infrastructure and avoid losing control of information security.

§ Maintenance of cloud computing applications is easier, because they do not need to be installed on each user's computer.
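The multi-tenancy points in the list above (peak-load capacity and utilization) can be illustrated with a small calculation. The sketch below is only an illustration: the tenant names and hourly load figures are invented, and the function names are hypothetical. It compares the capacity needed when each tenant provisions for its own peak with the capacity needed when all tenants share one pool sized for their combined peak.

```python
# Hypothetical hourly load (in arbitrary "server units") for three tenants
# whose peaks occur at different times. All figures are invented.
TENANT_LOADS = {
    "tenant_a": [2, 2, 10, 3, 2, 2],
    "tenant_b": [3, 9, 2, 2, 3, 2],
    "tenant_c": [1, 2, 2, 2, 8, 3],
}


def combined_load(loads):
    """Total load across all tenants for each hour."""
    return [sum(hour) for hour in zip(*loads.values())]


def dedicated_capacity(loads):
    """Capacity needed if every tenant provisions for its own peak."""
    return sum(max(series) for series in loads.values())


def pooled_capacity(loads):
    """Capacity needed if tenants share one pool sized for the combined peak."""
    return max(combined_load(loads))


def utilization(loads, capacity):
    """Average combined load divided by the provisioned capacity."""
    combined = combined_load(loads)
    return sum(combined) / (len(combined) * capacity)


if __name__ == "__main__":
    dedicated = dedicated_capacity(TENANT_LOADS)
    pooled = pooled_capacity(TENANT_LOADS)
    print(f"dedicated capacity: {dedicated}")
    print(f"pooled capacity:    {pooled}")
    print(f"utilization (dedicated): {utilization(TENANT_LOADS, dedicated):.0%}")
    print(f"utilization (pooled):    {utilization(TENANT_LOADS, pooled):.0%}")
```

With these invented figures, the dedicated approach needs 27 units while the shared pool needs 14, because the tenants' peaks do not coincide. Real workloads may be correlated, in which case the saving would be smaller.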

The term "cloud" is used as a metaphor for the Internet, based on the cloud drawing used in the past to represent the telephone network, and later to depict the Internet in computer network diagrams as an abstraction of the underlying infrastructure it represents.

Cloud computing is a natural evolution of the widespread adoption of virtualization, service-oriented architecture, autonomic, and utility computing. Details are abstracted from end-users, who no longer have need for expertise in, or control over, the technology infrastructure "in the cloud" that supports them.

The underlying concept of cloud computing dates back to the 1960s, when John McCarthy opined that "computation may someday be organized as a public utility." Almost all the modern-day characteristics of cloud computing (elastic provision, provided as a utility, online, illusion of infinite supply), the comparison to the electricity industry and the use of public, private, government, and community forms, were thoroughly explored in Douglas Parkhill's 1966 book, The Challenge of the Computer Utility.

The actual term "cloud" borrows from telephony in that telecommunications companies, who until the 1990s offered primarily dedicated point-to-point data circuits, began offering Virtual Private Network (VPN) services with comparable quality of service but at a much lower cost. By switching traffic to balance utilization as they saw fit, they were able to utilize their overall network bandwidth more effectively. The cloud symbol was used to denote the demarcation point between that which was the responsibility of the provider and that which was the responsibility of the user. Cloud computing extends this boundary to cover servers as well as the network infrastructure.

After the dot-com bubble, Amazon played a key role in the development of cloud computing by modernizing its data centers, which, like most computer networks, were using as little as 10% of their capacity at any one time, just to leave room for occasional spikes. Having found that the new cloud architecture resulted in significant internal efficiency improvements whereby small, fast-moving "two-pizza teams" could add new features faster and more easily, Amazon initiated a new product development effort to provide cloud computing to external customers, and launched Amazon Web Services (AWS) on a utility computing basis in 2006.

In early 2008, Eucalyptus became the first open-source, AWS API-compatible platform for deploying private clouds. In early 2008, OpenNebula, enhanced in the RESERVOIR European Commission-funded project, became the first open-source software for deploying private and hybrid clouds, and for the federation of clouds. In the same year, efforts were focused on providing QoS guarantees (as required by real-time interactive applications) to cloud-based infrastructures, in the framework of the IRMOS European Commission-funded project, resulting in a real-time cloud environment. By mid-2008, Gartner saw an opportunity for cloud computing "to shape the relationship among consumers of IT services, those who use IT services and those who sell them" and observed that "[o]rganisations are switching from company-owned hardware and software assets to per-use service-based models" so that the "projected shift to cloud computing ... will result in dramatic growth in IT products in some areas and significant reductions in other areas."

In July 2010, OpenStack was announced, attracting nearly 100 partner companies and over a thousand code contributions in its first year, making it the fastest-growing free and open source software project in history.

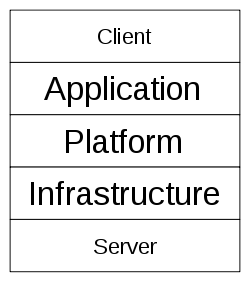

Once an internet protocol connection is established among several computers, it is possible to share services within any one of the following layers.

Client

A cloud client consists of computer hardware and/or computer software that relies on cloud computing for application delivery and that is in essence useless without it. Examples include some computers, phones and other devices, operating systems, and browsers.[32][33][34]

Application

Cloud application services or "Software as a Service (SaaS)" deliver software as a service over the Internet, eliminating the need to install and run the application on the customer's own computers and simplifying maintenance and support.

Platform

Cloud platform services, also known as platform as a service (PaaS), deliver a computing platform and/or solution stack as a service, often consuming cloud infrastructure and sustaining cloud applications. It facilitates deployment of applications without the cost and complexity of buying and managing the underlying hardware and software layers.

Infrastructure

Cloud infrastructure services, also known as "infrastructure as a service" (IaaS), deliver computer infrastructure (typically a platform virtualization environment) as a service, along with raw (block) storage and networking. Rather than purchasing servers, software, data-center space or network equipment, clients instead buy those resources as a fully outsourced service. Suppliers typically bill such services on a utility computing basis; the amount of resources consumed (and therefore the cost) will typically reflect the level of activity.
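As a rough illustration of billing on a utility computing basis, the sketch below computes a monthly charge from metered usage. The unit prices, the `Usage` fields, and the `monthly_bill` function are all assumptions made up for this example; real providers publish their own rate cards and meter different resources.

```python
from dataclasses import dataclass

# Hypothetical unit prices, invented for illustration only.
PRICE_PER_INSTANCE_HOUR = 0.10  # currency units per virtual machine hour
PRICE_PER_GB_MONTH = 0.05       # currency units per GB of block storage per month
PRICE_PER_GB_TRANSFER = 0.02    # currency units per GB of network transfer


@dataclass
class Usage:
    """Metered consumption for one billing month."""
    instance_hours: float
    storage_gb_months: float
    transfer_gb: float


def monthly_bill(usage: Usage) -> float:
    """Charge only for what was consumed, rounded to two decimal places."""
    total = (
        usage.instance_hours * PRICE_PER_INSTANCE_HOUR
        + usage.storage_gb_months * PRICE_PER_GB_MONTH
        + usage.transfer_gb * PRICE_PER_GB_TRANSFER
    )
    return round(total, 2)


if __name__ == "__main__":
    quiet_month = Usage(instance_hours=200, storage_gb_months=50, transfer_gb=10)
    busy_month = Usage(instance_hours=1500, storage_gb_months=50, transfer_gb=120)
    print("quiet month:", monthly_bill(quiet_month))
    print("busy month: ", monthly_bill(busy_month))
```

The two sample months use the same storage but very different compute and transfer, so the bill tracks the level of activity instead of a fixed infrastructure cost.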

Server

The servers layer consists of computer hardware and/or computer software products that are specifically designed for the delivery of cloud services, including multi-core processors, cloud-specific operating systems and combined offerings.

Cloud computing types

Public cloud

Public cloud describes cloud computing in the traditional mainstream sense, whereby resources are dynamically provisioned to the general public on a fine-grained, self-service basis over the Internet, via web applications/web services, from an off-site third-party provider who bills on a fine-grained utility computing basis.

Community cloud

Community cloud shares infrastructure between several organisations from a specific community with common concerns (security, compliance, jurisdiction, etc.), whether managed internally or by a third-party and hosted internally or externally. The costs are spread over fewer users than a public cloud (but more than a private cloud), so only some of the benefits of cloud computing are realised.

Hybrid cloud

Hybrid cloud is a composition of two or more clouds (private, community, or public) that remain unique entities but are bound together, offering the benefits of multiple deployment models.

Private cloud

Private cloud is infrastructure operated solely for a single organization, whether managed internally or by a third-party and hosted internally or externally.

Private clouds have attracted criticism because users "still have to buy, build, and manage them" and thus do not benefit from lower up-front capital costs and less hands-on management, essentially "[lacking] the economic model that makes cloud computing such an intriguing concept".

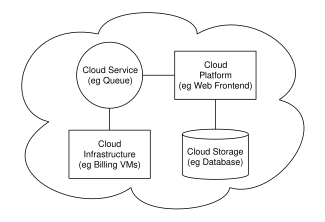

Cloud computing sample architecture

Cloud architecture, the systems architecture of the software systems involved in the delivery of cloud computing, typically involves multiple cloud components communicating with each other over a loose coupling mechanism such as a messaging queue.
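The loose coupling described above can be sketched in a few lines. The example below is a single-process stand-in, assuming Python's standard `queue` and `threading` modules in place of a real networked messaging service; the component names (`frontend`, `worker`), the message fields, and the `STOP` sentinel are hypothetical. The point is that the two components share only the queue and the message format, not references to each other.

```python
import queue
import threading

# Sentinel object telling the worker that no more messages will arrive.
STOP = object()


def frontend(outbox, jobs):
    """Producer: publishes job messages and knows nothing about the worker."""
    for job in jobs:
        outbox.put({"job_id": job, "action": "resize-image"})
    outbox.put(STOP)


def worker(inbox, results):
    """Consumer: processes messages and knows nothing about the producer."""
    while True:
        message = inbox.get()
        if message is STOP:
            break
        results.append(f"done:{message['job_id']}")


def run_pipeline(jobs):
    """Wire the two components together through a shared queue."""
    channel = queue.Queue()
    results = []
    consumer = threading.Thread(target=worker, args=(channel, results))
    producer = threading.Thread(target=frontend, args=(channel, jobs))
    consumer.start()
    producer.start()
    producer.join()
    consumer.join()
    return results


if __name__ == "__main__":
    print(run_pipeline([1, 2, 3]))
```

Because either side can be replaced, restarted, or scaled out without changing the other, a queue of this kind is one common way to keep cloud components independent.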

The Intercloud

The Intercloud is an interconnected global "cloud of clouds" and an extension of the Internet "network of networks" on which it is based.

Cloud engineering

Cloud engineering is the application of engineering disciplines to cloud computing. It brings a systematic approach to the high-level concerns of commercialization, standardization, and governance in conceiving, developing, operating and maintaining cloud computing systems. It is a multidisciplinary method encompassing contributions from diverse areas such as systems, software, web, performance, information, security, platform, risk, and quality engineering.

Privacy

The cloud model has been criticized by privacy advocates for the greater ease with which the companies hosting the cloud services control, and thus can monitor at will, lawfully or unlawfully, the communication between the user and the host company and the data stored there. Instances such as the secret NSA program that, working with AT&T and Verizon, recorded over 10 million phone calls between American citizens cause uncertainty among privacy advocates about the greater powers this gives telecommunication companies to monitor user activity. While there have been efforts (such as US-EU Safe Harbor) to "harmonize" the legal environment, providers such as Amazon still cater to major markets (typically the United States and the European Union) by deploying local infrastructure and allowing customers to select "availability zones."

Compliance

In order to obtain compliance with regulations including FISMA, HIPAA, and SOX in the United States, the Data Protection Directive in the EU and the credit card industry's PCI DSS, users may have to adopt community or hybrid deployment modes that are typically more expensive and may offer restricted benefits. This is how Google is able to "manage and meet additional government policy requirements beyond FISMA" and Rackspace Cloud or QubeSpace are able to claim PCI compliance.

Many providers also obtain SAS 70 Type II certification, but this has been criticised on the grounds that the hand-picked set of goals and standards determined by the auditor and the auditee are often not disclosed and can vary widely. Providers typically make this information available on request, under non-disclosure agreement.

Customers in the EU contracting with cloud providers established outside the EU/EEA have to adhere to the EU regulations on export of personal data.

Legal

In March 2007, Dell applied to trademark the term "cloud computing" (U.S. Trademark 77,139,082) in the United States. The "notice of allowance" the company received in July 2008 was canceled in August, resulting in a formal rejection of the trademark application less than a week later. Since 2007, the number of trademark filings covering cloud computing brands, goods, and services has increased rapidly. As companies sought to better position themselves for cloud computing branding and marketing efforts, cloud computing trademark filings increased by 483% between 2008 and 2009. In 2009, 116 cloud computing trademarks were filed, and trademark analysts predict that over 500 such marks could be filed during 2010.

Other legal cases may shape the use of cloud computing by the public sector. On October 29, 2010, Google filed a lawsuit against the U.S. Department of the Interior, which had opened a bid for software that required bidders to use Microsoft's Business Productivity Online Suite. Google sued, calling the requirement "unduly restrictive of competition." Scholars have pointed out that, beginning in 2005, the prevalence of open standards and open source may have an impact on the way that public entities choose to select vendors.

There are also concerns about a cloud provider shutting down for financial or legal reasons, which has happened in a number of cases.

Most transitions to a cloud computing solution entail a change from a technically managed solution to a contractually managed solution. This change necessitates increased IT contract negotiation skills to establish the terms of the relationship and vendor management skills to maintain the relationship. All rights and responsibilities that are associated with the relationship between a client and a cloud computing services provider must be codified in the contract and effectively managed until the relationship has been terminated. The specific risks and issues to be addressed in your contract with a cloud provider will vary on a case by case basis, depending upon your specific use needs. Key risks and issues that are either unique to cloud computing or essential to its effective adoption typically involve service level agreements; data processing and access; provider infrastructure and security; and contract and vendor management. It is essential to ensure that each of these key risks and issues is effectively evaluated and addressed in your contract with a cloud provider.

The NIST definition categorizes cloud computing into three service models: software as a service (SaaS), infrastructure as a service (IaaS) and platform as a service (PaaS). A key common word here is "service", so one of the key issues to consider when negotiating and managing your contract with a cloud provider is the level of service that will be required to meet your needs. Since much of the relationship between an organization and a cloud computing provider will be contractually governed, it is important for the contract to include service-level agreements (SLAs) stating specific parameters and minimum levels for each element of the service provided. The SLAs must be enforceable and state specific remedies that apply when they are not met. Aspects of cloud computing services where SLAs may be pertinent include service availability, performance and response time, error correction time, and latency.

The specific definitions of pertinent SLA terms in a contract are important as well. Such definitions in standard cloud provider contracts often provide a very narrow way of measuring SLA parameters. For example, these contracts may define "downtime" so as to exclude any time that service is unavailable due to maintenance that was scheduled or announced in advance. Calculation of downtime might be restricted to a minimum number of consecutive minutes or a minimum percentage error rate. Downtime could be measured by spreading it over a specified time period such as a week or a month. Such clauses can collectively result in a fairly narrow definition of total downtime.

Contracts should state specific remedies, such as corrections or penalties, for when SLAs are not met. Corrections codify what steps a cloud provider must take to prevent a future failure to meet an SLA. Penalties often take the form of a financial credit, so it's important to codify when and how a credit will be provided. The goal of such penalties is not to get credits but to motivate the supplier to provide the required level of service. Other ways to motivate appropriate performance include reputational penalties (a full-page ad in The New York Times announcing missed service levels can be a strong motivator) and rewards for exceeding service levels. Once you've effectively negotiated your SLA language in the contract and you've adopted the cloud service, your work is not done. You must then continually monitor the cloud provider's performance to ensure that they continue to meet those SLAs until such time as the contract is terminated and you move to an alternative solution.
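The effect of a narrow contractual definition of "downtime" can be made concrete with a small calculation. The sketch below is illustrative only: the outage records, the five-minute threshold, and the function and field names are assumptions invented for this example, not terms taken from any real contract. It counts the same set of outages under a broad definition and under a narrow one that excludes scheduled maintenance and ignores short outages.

```python
from dataclasses import dataclass

MINUTES_PER_30_DAY_MONTH = 30 * 24 * 60  # measurement period: 43,200 minutes


@dataclass
class Outage:
    minutes: int      # consecutive minutes the service was unavailable
    scheduled: bool   # True if announced in advance as maintenance


# Invented outage history for one month.
EXAMPLE_OUTAGES = [
    Outage(minutes=120, scheduled=True),
    Outage(minutes=4, scheduled=False),
    Outage(minutes=3, scheduled=False),
    Outage(minutes=45, scheduled=False),
]


def counted_downtime(outages, min_consecutive_minutes=0, exclude_scheduled=False):
    """Sum only the outages that the contract's definition counts as downtime."""
    total = 0
    for outage in outages:
        if exclude_scheduled and outage.scheduled:
            continue  # maintenance announced in advance is not counted
        if outage.minutes < min_consecutive_minutes:
            continue  # outages shorter than the threshold are not counted
        total += outage.minutes
    return total


def availability(downtime_minutes, period_minutes=MINUTES_PER_30_DAY_MONTH):
    """Fraction of the measurement period the service counts as available."""
    return 1 - downtime_minutes / period_minutes


if __name__ == "__main__":
    broad = counted_downtime(EXAMPLE_OUTAGES)
    narrow = counted_downtime(
        EXAMPLE_OUTAGES, min_consecutive_minutes=5, exclude_scheduled=True
    )
    print(f"broad definition:  {broad} min, availability {availability(broad):.2%}")
    print(f"narrow definition: {narrow} min, availability {availability(narrow):.2%}")
```

With this invented history, the broad definition counts 172 minutes of downtime while the narrow one counts only 45, so the same month can look noticeably better on paper. Running the same calculation against your own monitoring data is one way to check how a proposed SLA definition would have treated past incidents.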

The virtual nature of cloud computing makes it easy to forget that the service depends on a physical data center. Not all cloud computing vendors are created equal; there are both new and established vendors in this market space, so they don't all have the same knowledge and infrastructure in place. To ensure that you select a cloud provider that has well-run, efficiently structured data centers, it's important that an organization take steps to understand and verify the infrastructure operations management processes and mechanisms that the cloud provider has in place. A good starting point in gathering this information can be to use a questionnaire. The Cloud Security Alliance's Consensus Assessments Initiative Questionnaire is one good example to draw on when building your own questionnaire. Examples of key areas to evaluate include: capacity and resource planning; data replication, storage, distribution and recovery; change management policies and procedures; virtual server provisioning and management; asset inventory and management policies and procedures; and software development quality assurance. Once you've identified the appropriate practices, you can determine which address your specific needs, then codify them in the contract. One easy way to do this is to incorporate the cloud provider's responses into the contract as the minimum requirements for the cloud provider to meet in providing their service to you.

There have been a number of prominent data security breaches recently, all of which serve to demonstrate one of the risks common to any cloud service adoption: The cloud provider may not handle your data as securely as you would like. When you use any cloud computing service, you are trusting it with information, whether that be personal, regulated, proprietary or otherwise sensitive information. In doing so, you lose some of the control, or at least perceived control, that you had when you did the same things yourself. The first step to take in mitigating this risk is reading and understanding the cloud provider’s standard terms and conditions. The next step is to obtain as much knowledge as possible about the mechanisms and processes that the cloud provider has in place to keep your information secure. One way to obtain this knowledge is to leverage a questionnaire approach as noted above. Another option is to review the cloud provider's own documentation of their information security practices and processes. Some key information security issues to consider investigating in this process include: secure gateway environment; audit/penetration tests & reports; security monitoring systems; multitenancy data segregation; identity and access management; and encryption. Once you've identified the cloud provider's standard practices, compare those to your own needs relative to the type and sensitivity of the information you'll be entrusting to the cloud. Determine which mechanisms, practices and processes are the most important to meet your specific needs, and negotiate to codify them in the contract.

Open source

Open-source software has provided the foundation for many cloud computing implementations, one prominent example being the Hadoop framework. In November 2007, the Free Software Foundation released the Affero General Public License, a version of GPLv3 intended to close a perceived legal loophole associated with free software designed to be run over a network.

Open standards

Most cloud providers expose APIs that are typically well-documented (often under a Creative Commons license) but also unique to their implementation and thus not interoperable. Some vendors have adopted others' APIs and there are a number of open standards under development, with a view to delivering interoperability and portability.

Security

As cloud computing is achieving increased popularity, concerns are being voiced about the security issues introduced through adoption of this new model. The effectiveness and efficiency of traditional protection mechanisms are being reconsidered as the characteristics of this innovative deployment model differ widely from those of traditional architectures.

The relative security of cloud computing services is a contentious issue that may be delaying its adoption. Issues barring the adoption of cloud computing are due in large part to the private and public sectors' unease surrounding the external management of security-based services. It is the very nature of cloud computing-based services, private or public, that promotes external management of provided services. This gives cloud computing service providers a strong incentive to prioritize building and maintaining strong management of secure services. Security issues have been categorized into sensitive data access, data segregation, privacy, bug exploitation, recovery, accountability, malicious insiders, management console security, account control, and multi-tenancy issues. Solutions to the various cloud security issues vary, and include cryptography (particularly public key infrastructure, PKI), use of multiple cloud providers, standardization of APIs, improved virtual machine support, and legal support.

Sustainability

Although cloud computing is often assumed to be a form of "green computing", there is as yet no published study to substantiate this assumption. The siting of servers affects the environmental effects of cloud computing. In areas where climate favors natural cooling and renewable electricity is readily available, the environmental effects will be more moderate. Thus countries with favorable conditions, such as Finland, Sweden and Switzerland, are trying to attract cloud computing data centers.

Abuse

As with privately purchased hardware, crackers posing as legitimate customers can purchase the services of cloud computing for nefarious purposes. This includes password cracking and launching attacks using the purchased services. In 2009, a banking trojan illegally used the popular Amazon service as a command and control channel that issued software updates and malicious instructions to PCs that were infected by the malware.

Many universities, vendors and government organizations are investing in research around the topic of cloud computing:

§ In October 2007 the Academic Cloud Computing Initiative (ACCI) was announced as a multi-university project designed to enhance students' technical knowledge to address the challenges of cloud computing.